Kubernetes 101

Overview

Kubernetes is an open source platform designed to schedule and manage containerized workloads across many hosts. Google announced the project in 2014, drawing on its internal Borg system, and version 1.0 followed in 2015. It is now maintained by the Cloud Native Computing Foundation (CNCF). Because its design draws on Borg, which schedules over 2 billion containers per week, the architecture was built to scale and should be able to run your whole data center.

It’s important to realize that Kubernetes is very opinionated and won’t run just any old app. To run well, apps or workloads must be containerized and architected following most of the Cloud Native tenets. The definition of Cloud Native differs somewhat depending on who you ask, but I think The New Stack gives a pretty good summary.

You need to take into account how different life inside a container is from life on a very reliable server or VM. For example, containers will (and do) die and get rescheduled on an entirely different host. Data inside a container does not outlive the container unless you take additional steps. And of course, exposing services to external users works much differently than with legacy infrastructure.

That sounds complex, so why do we even need Kubernetes?

Kubernetes brings many benefits, like efficient use of resources, consistent delivery, app portability and automation. But by far, the primary reason to adopt it is to enable faster velocity for developers. Manual steps are the biggest thing slowing down the delivery of features to production. Kubernetes is a platform for running containerized apps that can be built, deployed and scaled to meet dynamic load, all without manual steps.

Kubernetes provides

- Scheduling and management of containers across all hosts in the cluster

- APIs for automating everything that happens in the cluster

- A standardized way to declare resource requirements, such as CPU, memory, networking & storage

- Efficient utilization of infrastructure capacity

High Level Architecture

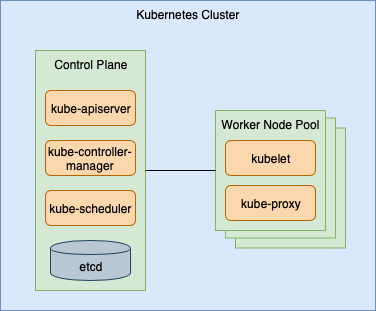

Similar to other resource schedulers, at the highest level the architecture consists of a control plane and a pool of capacity to run applications. The control plane is a set of services, “Master Components”, that run on hosts that are often called “Master Nodes”. They are responsible for knowing the total capacity of the pool and how much of it has been allocated, and for scheduling containers onto the pool. This process is often called resource scheduling or orchestration.

The primary Master Components include

- etcd - a key value store that maintains the state of the cluster

- kube-apiserver - provides an API external to the cluster for tools and automation

- kube-scheduler - scheduling engine that tracks capacity and determines where to run containers

- kube-controller-manager - manages the collection of controllers that manage the Kubernetes environment - one example is ReplicationController that manages instances of containers
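To make the scheduler's job more concrete, here is a minimal Python sketch of capacity-aware placement. It is a toy model and not the actual kube-scheduler algorithm: the `Node` class, the `schedule` function and the "pick the fitting node with the most free CPU" rule are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical worker node with some free capacity left."""
    name: str
    free_cpu_millis: int   # CPU in millicores (1000 = one core)
    free_memory_mib: int


def schedule(nodes: List[Node], cpu_millis: int, memory_mib: int) -> Optional[str]:
    """Place one container on the fitting node with the most free CPU.

    Returns the chosen node's name, or None when no node has room.
    """
    candidates = [
        n for n in nodes
        if n.free_cpu_millis >= cpu_millis and n.free_memory_mib >= memory_mib
    ]
    if not candidates:
        return None
    chosen = max(candidates, key=lambda n: n.free_cpu_millis)
    # Record the allocation so later requests see the reduced capacity.
    chosen.free_cpu_millis -= cpu_millis
    chosen.free_memory_mib -= memory_mib
    return chosen.name


if __name__ == "__main__":
    pool = [Node("worker-1", 2000, 4096), Node("worker-2", 1000, 8192)]
    print(schedule(pool, 500, 1024))    # fits on a worker
    print(schedule(pool, 4000, 1024))   # too large for any worker
```

The point of the sketch is the bookkeeping: the control plane has to know both total and allocated capacity before it can decide where a container goes.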

The control plane talks to a set of services, “Node Components”. These services run on hosts, often called “Worker Nodes”, that provide capacity to run the app containers or workloads. Node Components are responsible for executing the allocation and container operations as directed by the control plane.

The main Node Components include

- kubelet - the control plane talks to this service to request state changes on its host

- kube-proxy - facilitates network rules and connection forwarding on the host

The diagram at the start of this section shows how these components fit together at the highest level.

Beyond scheduling, Kubernetes also provides a wealth of building blocks to help you deploy and run workloads. Some examples are

- Resource allocation and management

- Internal service discovery

- Network scaffolding

- Storage provisioning

- Secrets and configuration storage

Kubernetes brings it all together in a standardized definition language based on YAML. It’s a declarative specification for the cluster state you want. The control plane takes the specification and makes changes to arrive at the desired state.
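As an illustration of what such a specification can look like, the Python sketch below builds a Deployment manifest as a dictionary and prints it as JSON, which kubectl accepts alongside YAML. The app name `hello-web`, the image `nginx:1.25` and the resource figures are placeholder assumptions, and `build_deployment` is a hypothetical helper, not part of any Kubernetes library.

```python
import json


def build_deployment(name: str, image: str, replicas: int) -> dict:
    """Return a Deployment manifest that declares the desired state."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # how many instances should be running
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Placeholder resource requirements for the demo.
                            "resources": {
                                "requests": {"cpu": "250m", "memory": "128Mi"},
                                "limits": {"cpu": "500m", "memory": "256Mi"},
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = build_deployment("hello-web", "nginx:1.25", replicas=3)
    print(json.dumps(manifest, indent=2))
```

Saving the output to a file and passing it to `kubectl apply -f` would ask the control plane to converge on three running replicas. Note that the manifest only says what should exist, not how to get there.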

The control plane also continues to monitor the cluster state and adjust, which is great when an app unexpectedly crashes. The control plane sees the state is missing an instance and automatically runs a new one to replace it.
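This monitor-and-adjust behavior can be pictured as a reconciliation pass that compares desired state with observed state. The Python sketch below is a simplified model under stated assumptions: `reconcile` and the instance naming scheme are hypothetical, and real controllers work against the API server rather than an in-memory list.

```python
from typing import List


def reconcile(desired_replicas: int, running: List[str], app: str) -> List[str]:
    """Return the instance list after one reconciliation pass.

    Starts replacements when instances are missing and stops extras
    when there are too many. Instance names are made up for the demo.
    """
    instances = list(running)
    counter = 0
    while len(instances) < desired_replicas:
        candidate = f"{app}-{counter}"
        if candidate not in instances:
            instances.append(candidate)  # "start" a replacement instance
        counter += 1
    while len(instances) > desired_replicas:
        instances.pop()  # "stop" a surplus instance
    return instances


if __name__ == "__main__":
    state = reconcile(3, [], "web")
    print(state)
    state.remove("web-1")           # simulate an unexpected crash
    state = reconcile(3, state, "web")
    print(state)                    # back to three instances
```

Running the pass repeatedly is what keeps the cluster at the declared state: a crash only changes the observed side of the comparison, and the next pass closes the gap.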

Conclusion

In summary, Kubernetes is a platform to run container workloads that comes with a long list of benefits. When used properly, it enables developers to achieve a faster release velocity and makes apps more resilient. In future posts, I’ll go deeper into the components and how to get the most out of the platform.